The Evolution of Computing: Harnessing the Power of Accelerated Computing, GPUs, AI, and Quantum Technologies

Introduction: The Evolution of Computing

General-purpose computing has been at the core of technological advancement for over six decades, with the rapid evolution of both hardware and software transforming industries worldwide. For decades, Moore’s Law has been a driving force behind the exponential growth of processor performance, enabling faster, more efficient systems without drastic changes to software. This led to massive benefits across every sector, from business and healthcare to education and entertainment. However, as traditional Central Processing Units (CPUs) approach the physical limits of transistor scaling, the “free ride” that Moore’s Law once provided is coming to an end. In response, Graphics Processing Units (GPUs) have emerged as a leading alternative, offering specialized acceleration for tasks such as real-time graphics rendering, artificial intelligence, and deep learning, and driving a new wave of advances beyond what general-purpose processors deliver on their own.

As we approach the end of this era, we must reconsider our approach to computing. The traditional model, where hardware improvements could easily keep up with software demands, is no longer sustainable. This is where accelerated computing comes into play. Accelerated computing, combined with the power of machine learning (ML), artificial intelligence (AI), and quantum computing, is revolutionizing the way we solve complex problems and tackle some of humanity’s greatest challenges.

In this article, we explore how accelerated computing is reshaping industries, how AI is driving innovation, and how quantum computing may pave the way for a new era in technology.

Accelerated Computing: A New Paradigm

The breakthrough of accelerated computing was made possible by companies like NVIDIA, which introduced the graphics processing unit (GPU) as a new way to handle tasks that were once impractical for traditional CPUs. The development of GPUs allowed for real-time computer graphics, which revolutionized industries like gaming and design. However, the potential of GPUs extends far beyond graphics.

Accelerated computing is now used across a range of fields, from semiconductor manufacturing and computational lithography to simulation and quantum computing research. By reinventing the computing stack, from the algorithms down to the architecture, accelerated computing has enabled industries to solve problems that were previously too complex to address with general-purpose computing. In many cases, applications that would take hours or even days to run on CPUs can now be processed in a fraction of the time with GPUs and other accelerators.

GPUs: The Key to Real-Time Graphics and Beyond

One of the earliest and best-known applications of accelerated computing is computer graphics, where GPUs made real-time rendering practical. NVIDIA’s CUDA platform later opened the same hardware to general-purpose parallel programming, so that workloads other than graphics could run on GPUs. The rise of the GPU as a specialized processor has been a game-changer, not only for entertainment but also for industries that rely on high-performance computing (HPC) and AI. Today, GPUs are used in a wide range of applications, from medical imaging to climate simulations, and have become an essential tool for tackling complex problems.

Software 1.0 vs Software 2.0: A Shift in Development

The fundamental nature of software development has undergone a transformation over the last decade. Previously, software development was dominated by “Software 1.0,” where developers wrote code to solve specific problems using traditional programming languages like Python, C, or Fortran. In this model, programmers manually designed algorithms to perform computations and produce outputs based on a set of inputs.

However, this approach is being disrupted by “Software 2.0,” which uses machine learning to derive algorithms from large datasets. In Software 2.0, people still choose the model and the training data, but the machine learns the function that maps inputs to outputs rather than having a programmer specify it by hand. This is a paradigm shift in the way software is created and applied.

Instead of humans writing specific algorithms for every task, machine learning enables computers to study patterns in data and automatically generate models that can make predictions or perform tasks. For instance, deep learning models can be trained to recognize images, translate languages, or even predict future events based on historical data. These models can then be deployed on GPUs, which are optimized for the parallel processing required by deep learning algorithms.
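The contrast between the two styles can be sketched with a toy problem. The example below is only an illustration, not how production models are built: the function names, the Celsius-to-Fahrenheit task, and the learning-rate and epoch values are all assumptions chosen for clarity. The first function is a hand-written rule (Software 1.0); the second recovers the same rule from example pairs by gradient descent (Software 2.0).

```python
# Software 1.0: a programmer writes the rule by hand.
def celsius_to_fahrenheit_v1(c):
    return c * 9.0 / 5.0 + 32.0


# Software 2.0: the rule is learned from (input, output) examples.
def fit_linear(samples, lr=0.1, epochs=2000):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical training data: temperatures labelled with the known answer.
# Inputs are scaled to roughly [-0.4, 1.0] so plain gradient descent is stable.
SCALE = 100.0
training_data = [(c / SCALE, celsius_to_fahrenheit_v1(c)) for c in range(-40, 101, 10)]
learned_w, learned_b = fit_linear(training_data)


def celsius_to_fahrenheit_v2(c):
    """Prediction from the learned parameters instead of a hand-written formula."""
    return learned_w * (c / SCALE) + learned_b
```

On this noise-free data the learned function should agree closely with the hand-written one. Real deep learning replaces the two parameters with millions or billions, which is where the parallel arithmetic of GPUs becomes important.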

Accelerating Industries with GPUs and AI

The ability to accelerate computing across multiple industries is revolutionizing not only software but entire sectors. By developing specialized AI and ML tools, NVIDIA has accelerated applications in various domains such as:

- Quantum Computing: Quantum computers use the principles of quantum mechanics to perform certain computations that are intractable for classical computers. Accelerated computing supports this work by simulating quantum systems on classical hardware and by running alongside quantum processors in hybrid setups, helping with problems like simulating quantum materials or modeling complex chemical reactions, which are crucial for advances in materials science and drug discovery.

- Computer-Aided Engineering (CAE): Engineers and designers have long relied on simulations to test and refine their ideas. With the power of accelerated computing, simulations can be run at a higher level of precision and speed, opening up new possibilities for design innovation in industries like aerospace, automotive, and energy.

- Artificial Intelligence and Machine Learning: AI has already made profound impacts in fields such as healthcare, finance, and transportation. GPUs are the backbone of deep learning, a subset of AI, which has enabled the development of applications like computer vision, natural language processing, and predictive analytics. The introduction of AI-powered agents, which can autonomously perform complex tasks, is further pushing the envelope.

Real-World Impact of Accelerated Computing

The practical applications of accelerated computing are vast. Some examples include:

- Autonomous Vehicles: Self-driving cars require real-time processing of vast amounts of data, from sensors like LiDAR to cameras and radar. GPUs and AI algorithms allow autonomous vehicles to process and interpret this data in real-time, making decisions that allow the car to navigate safely on the road.

- Healthcare: In medical imaging, GPUs enable doctors and researchers to process scans faster and with more accuracy. Additionally, AI is helping accelerate drug discovery by simulating complex biological interactions that would otherwise take years to test in laboratories.

- Energy and Sustainability: Accelerated computing is also making a significant impact on the energy sector. By simulating and optimizing energy grids, engineers can design more efficient and sustainable systems. GPUs are also being used to model climate change, enabling scientists to predict and respond to future environmental challenges.

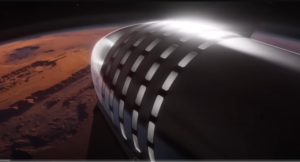

Quantum Computing: The Next Frontier

While accelerated computing has already made significant strides, the future of computing will also be shaped by quantum computing. Unlike classical computers, which use bits that are either 0 or 1, quantum computers use quantum bits, or qubits, which can exist in a superposition of 0 and 1 until they are measured. Algorithms that exploit superposition, together with entanglement and interference, open up the potential for solving certain problems that would be intractable for classical computers.
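To make the difference between a bit and a qubit concrete, here is a minimal sketch of a single qubit simulated on a classical machine. The function names are illustrative, not taken from any quantum library. A qubit state is stored as a pair of amplitudes, the Hadamard gate puts a definite 0 into an equal mix of 0 and 1, and the last function shows why classical simulation gets expensive: an n-qubit state needs 2^n amplitudes.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp0, amp1)."""
    a0, a1 = state
    s = 1.0 / math.sqrt(2.0)
    return (s * (a0 + a1), s * (a0 - a1))


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return tuple(abs(a) ** 2 for a in state)


def amplitudes_needed(num_qubits):
    """Number of amplitudes a classical simulator must store for n qubits."""
    return 2 ** num_qubits


zero = (1.0, 0.0)        # the qubit is definitely 0
plus = hadamard(zero)    # equal superposition of 0 and 1
back = hadamard(plus)    # applying the gate again returns (approximately) to 0
```

Here `probabilities(plus)` gives roughly equal chances of measuring 0 or 1. The exponential growth of `amplitudes_needed` is one reason GPUs are used to simulate quantum circuits.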

In particular, quantum computers have the potential to revolutionize fields such as cryptography, materials science, and complex simulations. The combination of classical and quantum computing, known as hybrid computing, holds promise for solving problems that require massive computational power.

The Future of Accelerated Computing: Challenges and Opportunities

While the potential for accelerated computing is immense, there are still several challenges to overcome. One of the biggest challenges is the need for software developers to rewrite their code to take advantage of the parallel processing capabilities of GPUs and other accelerators. This requires significant collaboration between hardware manufacturers, software developers, and industry stakeholders.
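What that rewriting involves can be sketched on a small scale. The example below is an assumption-laden illustration in plain Python, not GPU code: it takes a simple loop, `a * x[i] + y[i]`, and restructures it so that independent chunks of the data are handled by separate workers. The function names and the worker count are hypothetical. In standard CPython, threads will not actually speed up this arithmetic; the point is the change in structure, which is the same change a GPU port requires.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_serial(a, x, y):
    """Original form: one loop walks the data from start to finish."""
    out = []
    for i in range(len(x)):
        out.append(a * x[i] + y[i])
    return out


def saxpy_chunk(a, x, y, start, stop):
    """Work on one independent slice; no element depends on any other."""
    return [a * x[i] + y[i] for i in range(start, stop)]


def saxpy_parallel(a, x, y, workers=4):
    """Restructured form: split the index range and process chunks concurrently."""
    n = len(x)
    chunk = max(1, (n + workers - 1) // workers)  # ceiling division, at least 1
    bounds = [(s, min(s + chunk, n)) for s in range(0, n, chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the submitted bounds.
        parts = pool.map(lambda b: saxpy_chunk(a, x, y, b[0], b[1]), bounds)
        return [value for part in parts for value in part]
```

Both versions return the same values. The restructuring works only because each output element is independent, which is the property developers have to find or create in existing code before an accelerator can help.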

Additionally, as AI models continue to grow, the demand for computational power is increasing at an exponential rate. This is often described in terms of a “scaling law”: models tend to improve as model size and training data grow, with both roughly doubling every year, creating a need for more advanced hardware systems like NVIDIA’s Blackwell architecture.
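The effect of doubling every year is easy to underestimate, so a short worked calculation may help. This sketch rests on one stated assumption that is not in the text above: that training compute is roughly proportional to model size multiplied by the amount of training data. Under that assumption, doubling both each year means compute demand roughly quadruples each year.

```python
def growth_factor(rate_per_year, years):
    """Compound growth: a quantity multiplied by rate_per_year each year."""
    return rate_per_year ** years


def compute_growth(years, model_rate=2.0, data_rate=2.0):
    """Relative training compute after `years`.

    Assumption (illustrative): compute is proportional to
    model size times training data size.
    """
    return growth_factor(model_rate, years) * growth_factor(data_rate, years)
```

With the default rates, `compute_growth(1)` is 4.0 and `compute_growth(5)` is 1024.0, a thousandfold increase in five years if the doubling holds.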

Despite these challenges, the future of accelerated computing looks bright. As hardware and software continue to evolve, we can expect further breakthroughs in AI, quantum computing, and other fields. The next decade will be crucial in shaping the future of technology, and accelerated computing will play a pivotal role in driving that transformation.

GPU Performance and Industry Growth

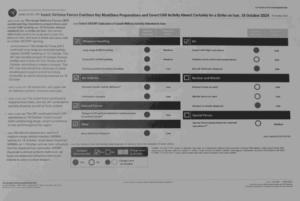

| Industry | Application | GPU Acceleration | Performance Improvement |
|---|---|---|---|
| Autonomous Vehicles | Real-time data processing | GPUs for sensor fusion | 50x faster data processing |
| Healthcare | Medical imaging, drug discovery | AI-powered diagnostics | 10x faster analysis |
| Energy and Sustainability | Energy grid optimization | Climate simulations | 20x faster simulations |
| Artificial Intelligence | Deep learning model training | Neural networks on GPUs | 30x faster training |
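To read speedup figures like these in practical terms, the sketch below converts a speedup factor into a runtime and shows how the overall gain shrinks when only part of a workload is accelerated (Amdahl's law). The 10-hour baseline and the 90% accelerated fraction in the usage note are hypothetical values for illustration, not measurements.

```python
def accelerated_runtime(baseline_hours, speedup):
    """Runtime after acceleration, assuming the whole job speeds up equally."""
    return baseline_hours / speedup


def overall_speedup(accelerated_fraction, speedup):
    """Amdahl's law: only `accelerated_fraction` of the runtime gets faster."""
    serial_part = 1.0 - accelerated_fraction
    return 1.0 / (serial_part + accelerated_fraction / speedup)
```

For example, a hypothetical 10-hour job at a 50x speedup would take `accelerated_runtime(10, 50)` hours, or about 12 minutes. If only 90% of that job can run on the GPU, `overall_speedup(0.9, 50)` comes to roughly 8.5x rather than 50x, which is why the unaccelerated parts of an application matter.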

Conclusion: The Road Ahead for Accelerated Computing and AI

The future of computing is accelerating at an unprecedented rate, fueled by advances in accelerated computing, artificial intelligence, and quantum technologies. As Moore’s Law approaches its limits, industries must adapt and innovate to sustain progress and continue solving the world’s most complex problems. This is where accelerated computing, powered by specialized hardware like GPUs and AI-driven software, plays a crucial role in reshaping industries and driving breakthroughs in every sector.

With the success of accelerated computing in fields like graphics, healthcare, energy, and autonomous vehicles, the possibilities for further innovation are vast. The integration of machine learning and AI, along with the emerging power of quantum computing, is set to deliver even more significant gains in computational power. As hardware becomes more powerful and software evolves with machine learning techniques, the way we approach computing will transform entirely, leading to faster problem-solving, more efficient systems, and new technological capabilities.

However, the journey is not without challenges. Rewriting software to take full advantage of these technologies, as well as scaling up hardware to meet the growing demands of AI and quantum models, will require continued collaboration and innovation. But with the momentum building in the tech world, it is clear that the future of computing is poised to be more impactful and transformative than ever before.

As industries continue to integrate AI, machine learning, and quantum computing into their workflows, accelerated computing will continue to be the backbone of technological progress. The next decade promises to be a period of exponential growth, as we harness the power of these advanced technologies to solve the world’s greatest challenges, from climate change to healthcare and beyond.

Frequently Asked Questions (FAQs)

1. What is accelerated computing and how does it work?

Accelerated computing refers to using specialized hardware, such as GPUs, to offload specific computing tasks that traditional CPUs can’t handle efficiently. These specialized processors are optimized for parallel processing, enabling them to perform complex tasks much faster than CPUs. Accelerated computing powers AI, deep learning, simulations, and real-time applications across various industries.

2. How has AI impacted computing?

AI has transformed computing by automating the process of learning and making predictions from massive datasets. Instead of writing specific algorithms, developers use machine learning to train models that can automatically learn patterns and make decisions. This has led to significant advancements in fields like healthcare, autonomous vehicles, and financial modeling.

3. What is the relationship between GPUs and AI?

GPUs are designed to handle parallel tasks efficiently, making them ideal for running AI models, particularly deep learning models, which require processing large datasets. GPUs enable faster training and inference for AI applications, significantly speeding up the development of AI systems in industries like robotics, healthcare, and natural language processing.

4. What is quantum computing and how does it relate to accelerated computing?

Quantum computing uses quantum bits (qubits) to perform certain calculations that classical computers cannot perform efficiently. Unlike classical bits, which are either 0 or 1, qubits can exist in a superposition of both states until they are measured. While quantum computing is still in its early stages, it holds the potential to revolutionize fields like cryptography, materials science, and complex simulations, particularly when combined with classical computing systems in hybrid solutions.

5. How is accelerated computing used in real-world applications?

Accelerated computing has widespread applications in industries such as:

- Autonomous Vehicles: Real-time data processing for self-driving cars.

- Healthcare: AI-powered medical imaging and drug discovery.

- Energy: Optimizing energy grids and simulating climate change.

- AI: Speeding up deep learning model training for tasks like language translation, image recognition, and decision-making.

6. What are the challenges of accelerated computing?

One of the main challenges is adapting existing software to take advantage of GPUs and other accelerators. Many industries need to rewrite their code to optimize it for parallel processing, which requires a significant amount of time and collaboration between hardware manufacturers and software developers. Additionally, the growing size and complexity of AI models increase the demand for even more powerful hardware systems.

7. What does the future of accelerated computing look like?

The future of accelerated computing is incredibly promising, with continued advancements in AI, quantum computing, and specialized hardware. As the demand for computational power grows, accelerated computing will play a pivotal role in solving increasingly complex challenges across all sectors. The continued evolution of machine learning, neural networks, and quantum technologies promises to usher in a new era of computing that will reshape the technological landscape.
